Log experiments

Machine learning is a highly iterative process: algorithms have many hyperparameters to keep track of, and the performance of the models evolves as you get more data. To manage the model lifecycle, we will use MLflow.

First, import mlflow

import mlflow

MLflow can be run on the local computer to try it out, but I recommend deploying it on a server. In our case, the server is located on the local network at 192.168.1.5:4444. The mlflow client connects to it via the set_tracking_uri method:

mlflow.set_tracking_uri("http://192.168.1.5:4444")

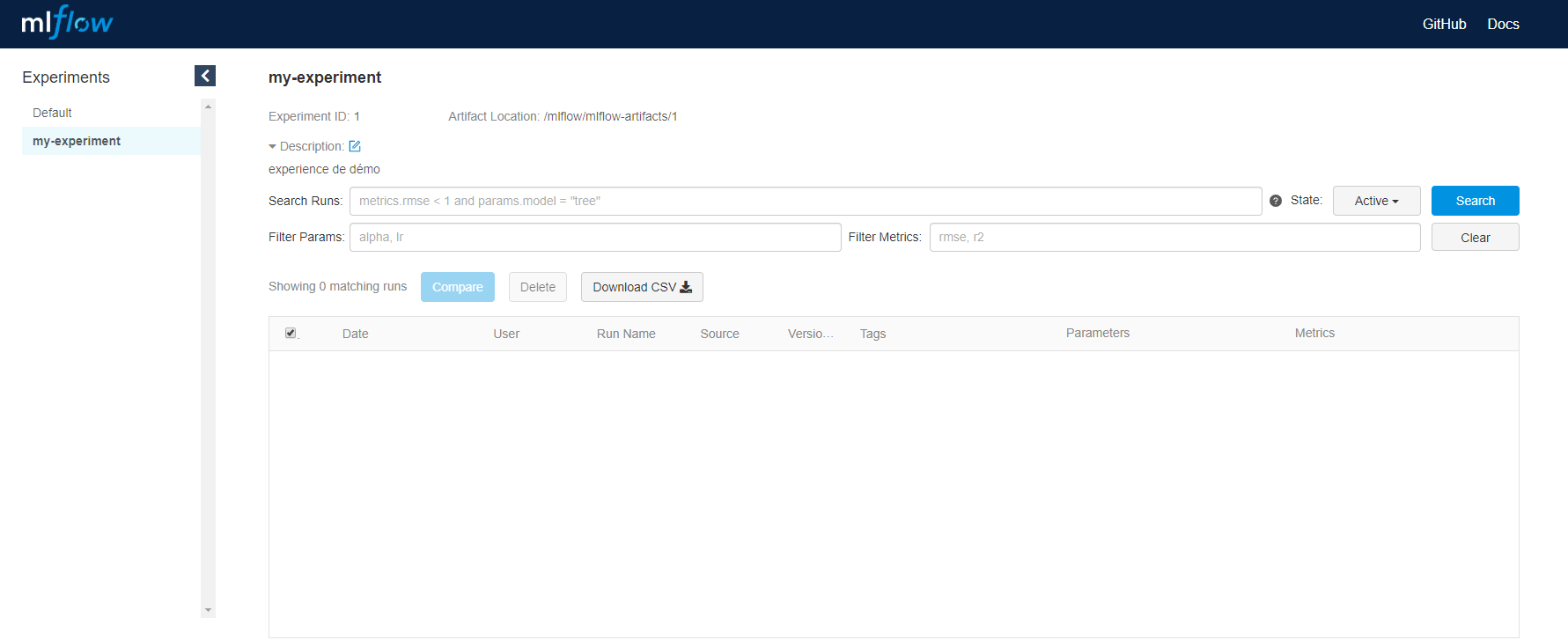

MLflow can be used to record and query experiments: code, data, config, results... Let's specify that we are working on my-experiment with the set_experiment method. If the experiment does not exist, it will be created.

mlflow.set_experiment("my-experiment")

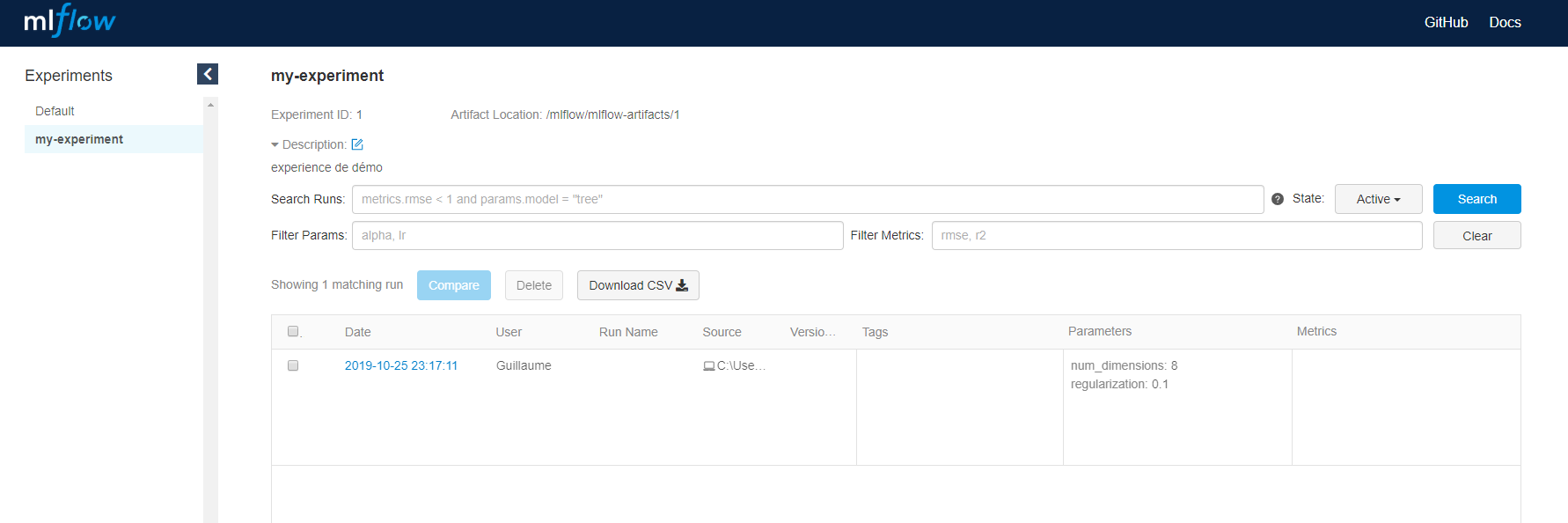

Hyperparameters are recorded with the log_param method:

mlflow.log_param("num_dimensions", 8)

mlflow.log_param("regularization", 0.1)

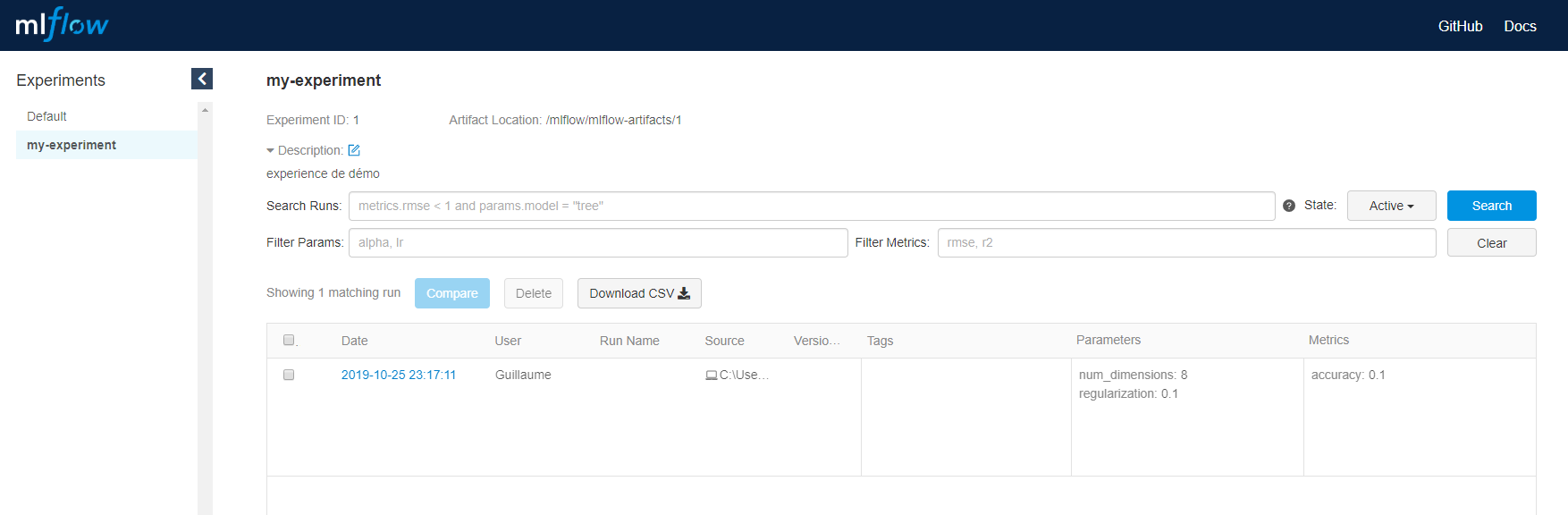

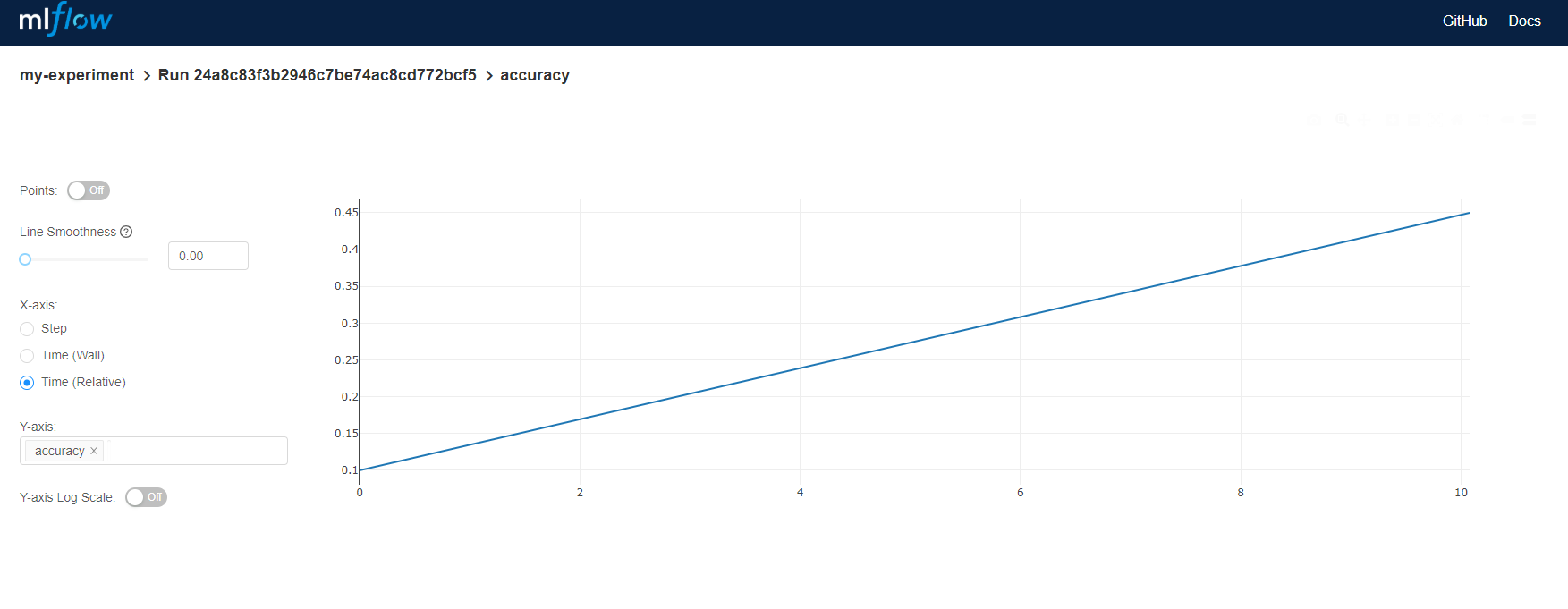

Metrics can be logged as well: just use the log_metric method.

mlflow.log_metric("accuracy", 0.1)

mlflow.log_metric("accuracy", 0.45)

A metric can be logged again at a later time. MLflow keeps every value, so the history of the metric is tracked within the run.

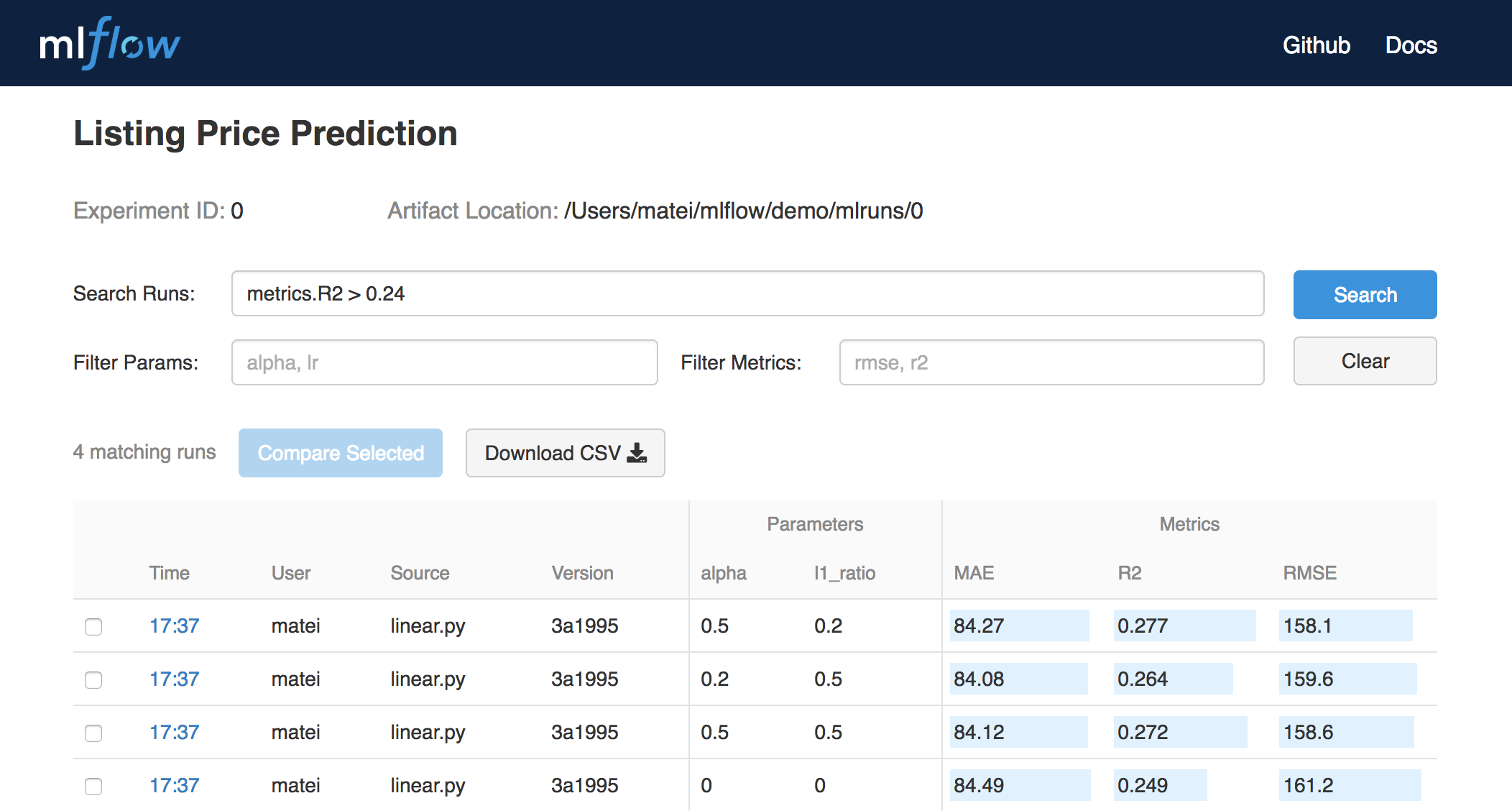

You can use MLflow Tracking in any environment (for example, a standalone script or a notebook) to log results to local files or to a server, then compare multiple runs. Using the web UI, you can view and compare the output of multiple runs. Teams can also use the tools to compare results from different users: